In 1950, mathematician, computer scientist, logician and overall smart guy Alan Turing proposed a simple idea: if a human observing a text-only conversation between another human and a bot can't tell which one is the machine, the machine passes what we now call the Turing test.

We’re not there yet with today’s artificial intelligence (AI) chatbots. But Microsoft says GPT-4, the latest version of OpenAI’s software, shows “human-like reasoning.”

A spark of genius

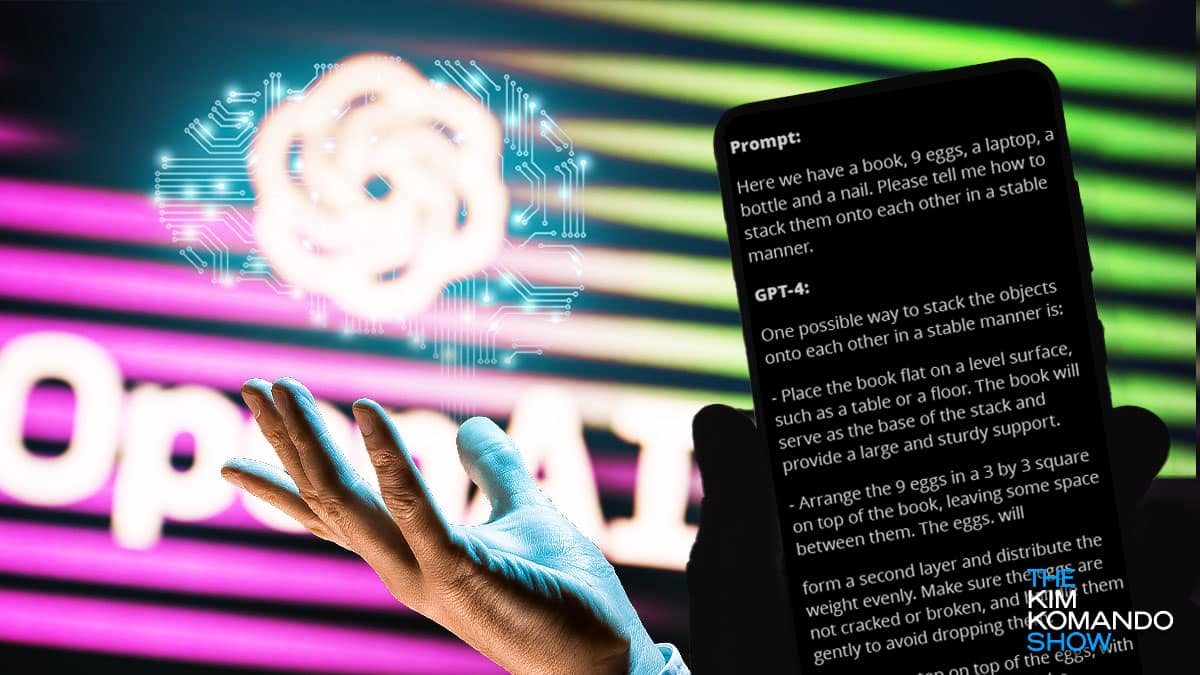

Here’s the prompt that led Microsoft researchers to their conclusion: Bing Chat (running on GPT-4) was asked to “stack a book, nine eggs, a laptop, a bottle and a nail in a stable manner.”

As a human (yes, real human Kim writing this newsletter), I know you'd have to be careful with the eggs. So did the bot. It recommended arranging things so the eggs wouldn't crack … something researchers say only a human could understand.

OK, but even AI would know eggs can crack, right?

But would it really know exactly what it would take to break them, and how to handle them properly? Here's what Bing Chat said:

“Make sure the eggs are not cracked or broken, and handle them gently to avoid dropping them.”

Not convinced?

Ray Kurzweil, Google's Director of Engineering, has made a startling number of correct tech predictions over the past few decades. They range from the rise of the internet to computers smart enough to beat people at chess.

A few years ago, he predicted that by 2029, computers would have human-level intelligence. By 2045, he says, machines will be more intelligent than humans. The term for that is the singularity. Now you know.

Looks like we’ve got 22 years to see if he was right.