A Texas A&M professor failed more than half his students for using ChatGPT to write their papers. The problem? Most of them probably didn’t.

Unfortunately, Dr. Jared Mumm is more of a “fail first, ask questions later” kinda guy.

His first mistake …

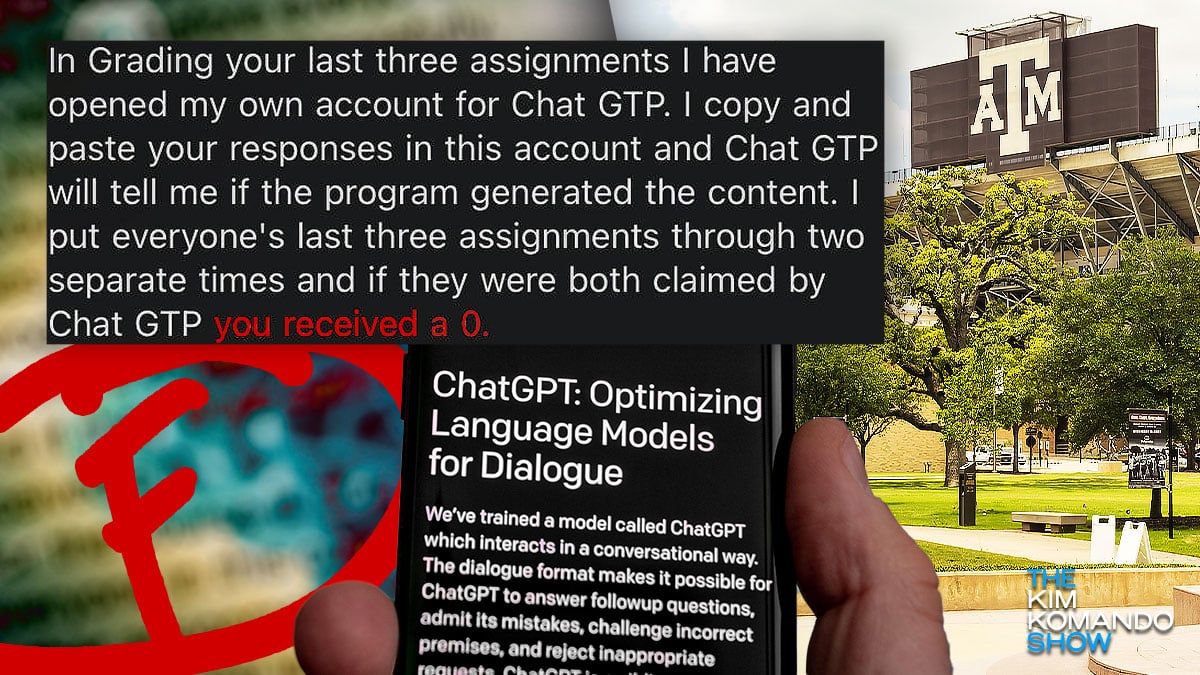

The agricultural sciences and natural resources professor says he submitted three essays from each student to ChatGPT to test whether they had used it to write their assignments.

He put students’ work through “two separate times and if they were both claimed by ChatGPT, you received a 0.”

When asked about final grades, Dr. Mumm pulled a Gandalf: “You shall not pass.” Most of his students (who had already graduated) had their diplomas put on hold.

His second mistake …

Mess with someone’s diploma, and you can bet it’ll end up on social media. A Redditor who shared the story says several students showed the professor their timestamped Google Docs to prove they wrote the papers.

He “ignored the emails, instead only replying on their grading software in the remarks: ‘I don’t grade AI bullsh*t.’”

One student, though, reportedly received an apology after proving they wrote their papers. Two more admitted to using the software, which, of course, makes this all a lot messier for those who didn’t.

ChatGPT isn’t good at catching copycats

So, how can you tell if students are cheating? ChatGPT wasn’t designed to spot its own work (and sometimes it comes back with false positives, claiming text it never wrote). Luckily, some programs are built for the job, including Winston AI and Content at Scale.

Oh, by the way, Texas A&M says they’re investigating and none of the students are barred from graduating.

If you’re a teacher or know one, this is definitely info worth passing along so you don’t make the same mistake.